Why this matters to business, developers, and testing teams

Vibe coding, that fast and loosely directed style of change-making, can be great for trying out ideas in a hurry. But it comes with a sting in the tail.

For the business, the trouble is that rapid changes with no clear boundaries can chip away at revenue, frustrate customers, and quietly harm the brand. Developers can find themselves in a loop of rework once the defect count starts creeping up. For testing teams, the “green build” signal begins to lose its meaning when the underlying quality can’t be trusted.

The truth is that uncontrolled change isn't inherently bad. It is a neutral fact of fast-moving work. With the right guardrails – strong invariants, short feedback loops, and an honest view of the impact – it can be channelled rather than fought.

AI changes the picture. Where a human developer will usually touch only what's necessary, AI coding assistants tend to rewrite large swathes of code in one go. This inflates the potential blast radius of a defect and makes diffs harder to read, so it becomes harder to understand exactly what changed. If we want AI to help rather than hinder in high-change environments, we need to push it towards smaller, more deliberate edits. Big changes should be the exception, made only when we have the invariants, tests, and rollback capability to handle them safely.
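One way to nudge AI-assisted work towards smaller edits is a CI check on diff size. The sketch below is a rough heuristic, assuming a hypothetical team budget of 200 changed lines and plain unified-diff input; `count_changed_lines` and `within_budget` are illustrative names, not an existing tool.

```python
# Hypothetical CI guardrail: flag changes whose diff touches more lines than
# an agreed budget. The 200-line budget is an illustrative assumption.

MAX_CHANGED_LINES = 200  # assumed team-agreed budget


def count_changed_lines(unified_diff: str) -> int:
    """Count added and removed lines in a unified diff, skipping file headers."""
    changed = 0
    for line in unified_diff.splitlines():
        # '+++' and '---' mark file headers, not content changes.
        if line.startswith(("+++", "---")):
            continue
        if line.startswith(("+", "-")):
            changed += 1
    return changed


def within_budget(unified_diff: str, budget: int = MAX_CHANGED_LINES) -> bool:
    """Return True if the diff is small enough to merge without extra review."""
    return count_changed_lines(unified_diff) <= budget


if __name__ == "__main__":
    sample = """--- a/checkout.css
+++ b/checkout.css
@@ -1,3 +1,3 @@
 .redeem-points {
-  display: block;
+  display: none;
 }
"""
    print(count_changed_lines(sample), within_budget(sample))
```

Size alone proves nothing about safety: this two-line sample stays well within budget, yet it is exactly the kind of small checkout style tweak that could hide the "redeem points" option. A diff budget limits blast radius; invariants are still needed to catch what slips through.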

A few realistic eCommerce mishaps:

- A tiny style tweak to the checkout hides the “redeem points” option for loyalty customers.

- A banner adjustment ends up obscuring the “free shipping” threshold message.

- A discount rule change accidentally gives customers double the intended reduction.
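The double-discount mishap is the kind of slip a small, explicit check catches. A minimal sketch, assuming a hypothetical 10% basket discount and illustrative `apply_discount` and `check_discount` helpers (not from any real codebase), compares what the customer was charged with what the rule intends:

```python
from decimal import Decimal

# Hypothetical discount rule: 10% off the basket subtotal. Names and rate are
# illustrative assumptions.
DISCOUNT_RATE = Decimal("0.10")


def apply_discount(subtotal: Decimal, rate: Decimal = DISCOUNT_RATE) -> Decimal:
    """Return the discounted total, rounded to pence."""
    return (subtotal * (1 - rate)).quantize(Decimal("0.01"))


def check_discount(subtotal: Decimal, charged: Decimal, rate: Decimal = DISCOUNT_RATE) -> None:
    """Fail loudly if the customer was charged anything other than the intended total."""
    expected = apply_discount(subtotal, rate)
    if charged != expected:
        raise AssertionError(
            f"discount mismatch: subtotal {subtotal}, expected {expected}, charged {charged}"
        )


# A correct charge passes silently.
check_discount(Decimal("50.00"), Decimal("45.00"))

# A rule change that doubles the reduction (20% instead of 10%) is caught.
doubled = apply_discount(Decimal("50.00"), DISCOUNT_RATE * 2)
try:
    check_discount(Decimal("50.00"), doubled)
except AssertionError as err:
    print(err)
```

`Decimal` is used rather than `float` so that money amounts compare exactly after rounding.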

Quality goals worth defending

“Passes all current tests” sounds reassuring, but it isn’t enough. Quality is a bigger tent, and it has a few core pillars.

- Correctness – The system behaves according to the business rules.

Example: An order confirmation email is only sent after payment is fully processed.

- Consistency – Users get the same outcome in all supported contexts.

Example: A voucher code works the same way whether you check out from London or Leeds, on mobile or desktop.

- Observability – You can spot when something goes wrong, measure it, and trace it back.

Example: An alert fires if the checkout payment step takes longer than two seconds for at least 5% of customers within a ten-minute window.

- Extensibility – You can add features without breaking what already works.

Example: Adding a “buy now” button doesn’t prevent the normal basket flow from working.

- Testability – You can actually get to the thing you want to test.

Example: The checkout keeps stable IDs for elements and predictable API responses, so automation doesn’t break when someone changes a font size.

Observability sits above extensibility in the pecking order. You can’t safely extend a system if you can’t see what it’s doing.
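The observability example in the list above can be made concrete as an alert rule. A minimal sketch, assuming a hypothetical `PaymentSample` record per customer payment attempt and treating "5% of customers" as at least 5% of payment attempts in the window:

```python
from dataclasses import dataclass

# Hypothetical alert rule: fire if at least 5% of checkout payment steps in the
# last ten minutes took longer than two seconds. Record shape is assumed.

WINDOW_SECONDS = 10 * 60
SLOW_THRESHOLD_SECONDS = 2.0
ALERT_FRACTION = 0.05


@dataclass
class PaymentSample:
    timestamp: float         # seconds since epoch when the payment step finished
    duration_seconds: float  # how long the payment step took


def should_alert(samples: list[PaymentSample], now: float) -> bool:
    """Return True if the share of slow payments in the last window reaches the threshold."""
    recent = [s for s in samples if now - WINDOW_SECONDS < s.timestamp <= now]
    if not recent:
        return False  # no traffic in the window, nothing to judge
    slow = sum(1 for s in recent if s.duration_seconds > SLOW_THRESHOLD_SECONDS)
    return slow / len(recent) >= ALERT_FRACTION


now = 1_000.0
samples = [PaymentSample(now - i, 0.8) for i in range(95)]
samples += [PaymentSample(now - i, 3.1) for i in range(5)]
print(should_alert(samples, now))  # True: 5 of 100 recent payments were slow
```

In practice a monitoring system would evaluate a rule like this continuously; the point of the sketch is that every number in the English sentence maps to one named constant.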

Executable Specifications: The Bridge Between Business and Code

Business problems start out in the language of the problem domain: the natural language of customers, sales numbers, regulations, and operating rules.

Example: “A customer can’t redeem more points than they have.”

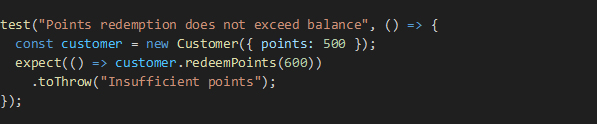

Executable specifications translate those truths into the solution domain language – precise, automated checks the system can run.

Example:
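A minimal sketch of such a check in Python, assuming a hypothetical `LoyaltyAccount` class with a `redeem_points` method (illustrative names only, not from a real codebase):

```python
# Hypothetical domain model: LoyaltyAccount and redeem_points are illustrative.


class InsufficientPointsError(Exception):
    """Raised when a redemption would take the balance below zero."""


class LoyaltyAccount:
    def __init__(self, balance: int) -> None:
        self.balance = balance

    def redeem_points(self, points: int) -> None:
        if points <= 0:
            raise ValueError("points to redeem must be positive")
        if points > self.balance:
            raise InsufficientPointsError(
                f"cannot redeem {points} points; balance is {self.balance}"
            )
        self.balance -= points


def test_customer_cannot_redeem_more_points_than_they_have() -> None:
    """Executable spec: 'A customer can't redeem more points than they have.'"""
    account = LoyaltyAccount(balance=100)
    try:
        account.redeem_points(150)
    except InsufficientPointsError:
        pass
    else:
        raise AssertionError("redeeming 150 of 100 points should have been rejected")
    # The rejected attempt must leave the balance untouched.
    assert account.balance == 100


def test_customer_can_redeem_up_to_their_balance() -> None:
    account = LoyaltyAccount(balance=100)
    account.redeem_points(100)
    assert account.balance == 0


if __name__ == "__main__":
    test_customer_cannot_redeem_more_points_than_they_have()
    test_customer_can_redeem_up_to_their_balance()
    print("spec holds")
```

The test names read as the business rule, so a failure report speaks the problem domain's language. Placed in a pytest test module, these `test_*` functions would also be collected automatically.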

That’s not “just a unit test”. It is a running, machine-enforced statement of what must always hold true.

These unchanging truths are invariants. They deserve to be visible, explicit, and hard to ignore.

- Invariant (problem domain): A product’s stock count never drops below zero.

- Executable spec (solution domain): A property test confirming stock >= 0 after any checkout flow.

- Production truth check: Metrics and logs show no negative stock counts in live transactions, with checks running in staging, canary, and production.
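The executable spec above can be sketched as a property test. This version assumes a hypothetical `Inventory` class whose checkout is all-or-nothing, and uses the standard library's `random` module to stay dependency-free; a property-based testing library such as Hypothesis would generate and shrink inputs for you.

```python
import random

# Hypothetical inventory model; names are illustrative, not a real codebase.


class OutOfStockError(Exception):
    pass


class Inventory:
    def __init__(self, stock: dict[str, int]) -> None:
        self.stock = dict(stock)

    def checkout(self, basket: dict[str, int]) -> None:
        """Reserve every line in the basket, or nothing at all."""
        for sku, qty in basket.items():
            if qty > self.stock.get(sku, 0):
                raise OutOfStockError(sku)
        for sku, qty in basket.items():
            self.stock[sku] -= qty


def check_stock_never_negative(runs: int = 500, seed: int = 42) -> None:
    """Property: after any sequence of checkouts, every stock count is >= 0."""
    rng = random.Random(seed)  # fixed seed so any failure is reproducible
    skus = ["mug", "tee", "cap"]
    for _ in range(runs):
        inventory = Inventory({sku: rng.randint(0, 5) for sku in skus})
        for _ in range(rng.randint(1, 10)):
            chosen = rng.sample(skus, rng.randint(1, 3))
            basket = {sku: rng.randint(1, 4) for sku in chosen}
            try:
                inventory.checkout(basket)
            except OutOfStockError:
                pass  # rejecting the order is fine; going negative is not
            assert all(count >= 0 for count in inventory.stock.values()), inventory.stock


check_stock_never_negative()
print("stock invariant held")
```

Instead of checking a handful of hand-picked orders, the property is asserted across hundreds of random order sequences, which is what makes it a statement about "any checkout flow".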

Not every executable specification is an invariant. Some are replaceable rules that can shift as the product changes direction.

Example replaceable spec: “Search results should show in-stock items first” – that might change if we decide to promote pre-orders.
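A replaceable spec can look just like an invariant in code; the difference is in how readily we would change it. A minimal sketch, assuming a hypothetical `SearchResult` record and `rank_results` function:

```python
from dataclasses import dataclass

# Hypothetical search result model and ranking function, for illustration only.


@dataclass
class SearchResult:
    name: str
    in_stock: bool
    relevance: float


def rank_results(results: list[SearchResult]) -> list[SearchResult]:
    """Current rule: in-stock items first, then by descending relevance."""
    return sorted(results, key=lambda r: (not r.in_stock, -r.relevance))


def test_in_stock_items_come_first() -> None:
    # Replaceable spec: rewrite or delete this test if the business decides to
    # promote pre-orders. Unlike an invariant, it records today's preference.
    results = rank_results([
        SearchResult("pre-order trainers", in_stock=False, relevance=0.9),
        SearchResult("running socks", in_stock=True, relevance=0.4),
        SearchResult("running shorts", in_stock=True, relevance=0.7),
    ])
    flags = [r.in_stock for r in results]
    # Once the first out-of-stock item appears, no in-stock item may follow it.
    assert flags == sorted(flags, reverse=True), [r.name for r in results]


test_in_stock_items_come_first()
```

Labelling the test as replaceable in a comment tells the next person that changing it is a product decision, not a regression.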

Why does this matter? Because invariants become the one true measure of correctness. The tests express them. Observability checks them in the real world. And feedback speed matters – catching a broken invariant two minutes after it happens is far better than two hours later. Clarity of feedback matters just as much as speed.
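To illustrate observability checking an invariant in the real world with clear feedback, here is a minimal sketch of a production truth check. It assumes a hypothetical `StockEvent` record already parsed from logs or a metrics stream; the event shape and the sample events are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical production truth check: scan recent stock-level events and
# report each invariant breach with enough context to act on it.


@dataclass
class StockEvent:
    at: datetime
    sku: str
    stock_after: int
    environment: str  # e.g. "staging", "canary", "production"


def find_negative_stock(events: list[StockEvent]) -> list[str]:
    """Return one clear, human-readable message per invariant violation."""
    return [
        f"[{e.environment}] {e.at.isoformat()} sku={e.sku} stock_after={e.stock_after} "
        "violates invariant: stock must never drop below zero"
        for e in events
        if e.stock_after < 0
    ]


# Illustrative events, not real data.
events = [
    StockEvent(datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc), "mug", 3, "canary"),
    StockEvent(datetime(2024, 5, 1, 9, 2, tzinfo=timezone.utc), "mug", -1, "canary"),
]
for message in find_negative_stock(events):
    print(message)
```

Run on a short schedule against staging and canary traffic, a check like this keeps feedback fast; naming the environment, time, SKU, and the broken rule in one line keeps it clear.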

Closing Thought for Part 1

If invariants are the recipe and observability is the thermometer, the question becomes: how do testers shape this change environment in practice? That’s what we’ll explore in Part 2.