In Part 1, we unpacked the risks of vibe coding and AI-driven change; now, in Part 2, we shift from problems to practice — exploring how testers can shape safer, faster change environments.

How testing teams can shape the change environment

Testers are not gatekeepers. They are developers looking in another direction. The mindset is similar, but the craft of testing runs deep and needs skill in its own right.

They contribute by:

- Working with analysts and developers to pin down invariants before any code changes.

- Helping to evolve those invariants as the business shifts.

- Designing deterministic tests that always produce the same results for the same inputs.

- Making tests hard to break by accident but quick to update on purpose.

- Aiming test coverage at the most damaging failure points.

- Ensuring the system is testable in the first place, with stable selectors, clean logs, and predictable interfaces.

I prefer to think of it less as “containing risk” and more as “shaping the change environment”. Risk itself isn’t the enemy. Not knowing the risk is.

Sample eCommerce invariants:

- Stock counts never go negative.

- Discounts never exceed the listed price.

- A basket can only be emptied by the user or after a successful checkout.
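
Invariants like these become far more useful once they are executable. The snippet below is a minimal sketch of the three rules above as plain Python checks. The names (`LineItem`, `violated_invariants`, `ALLOWED_EMPTY_REASONS`) and the reason strings are assumptions made for illustration, not part of any real shop's codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    sku: str
    list_price_cents: int
    discount_cents: int


# Hypothetical reason codes: the only events allowed to empty a basket.
ALLOWED_EMPTY_REASONS = {"user_action", "checkout_succeeded"}


def violated_invariants(stock: dict[str, int], items: list[LineItem]) -> list[str]:
    """Return one readable message per broken invariant (empty list = all good)."""
    problems = []
    for sku, count in stock.items():
        if count < 0:
            problems.append(f"stock for {sku} is negative ({count})")
    for item in items:
        if item.discount_cents > item.list_price_cents:
            problems.append(f"discount on {item.sku} exceeds its listed price")
    return problems


def basket_emptied_legitimately(reason: str) -> bool:
    """A basket may only be emptied by the user or after a successful checkout."""
    return reason in ALLOWED_EMPTY_REASONS
```

Checks of this shape can run in unit tests, in CI, and against production data, so the same rule is enforced in every place it could be broken.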

Sacred vs replaceable from a testing point of view

| Type | Example (eCommerce) | Test Approach |
|---|---|---|
| Sacred Core | Checkout flow, stock reservation, payments | Near-immutable invariants, property tests, contract tests |
| Replaceable | Homepage banners, category layouts, search ranking | Characterisation tests, visual regression, light unit tests |

These classifications change over time. A replaceable module can become sacred overnight if a new dependency ties it into the core. AI could suggest re-classifications when dependency maps change, but the final call should rest with humans.
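
To make the re-classification idea concrete, here is a rough sketch of how a tool might flag candidates when the dependency map changes. It assumes the map is available as a simple dictionary of module name to the modules it depends on; the function name and the module names are hypothetical. It only produces suggestions, leaving the decision with a person.

```python
def suggest_reclassifications(
    depends_on: dict[str, set[str]], sacred: set[str]
) -> set[str]:
    """Return non-sacred modules that sacred modules depend on, directly or transitively."""
    suggestions = set()
    seen = set(sacred)
    to_visit = list(sacred)
    while to_visit:
        module = to_visit.pop()
        for dependency in depends_on.get(module, set()):
            if dependency not in seen:
                seen.add(dependency)
                to_visit.append(dependency)
                suggestions.add(dependency)  # candidate for human review
    return suggestions


# Hypothetical dependency map after a change wires search ranking into checkout.
dependency_map = {
    "checkout": {"payments", "search_ranking"},
    "search_ranking": {"category_layout"},
    "homepage_banner": set(),
}
candidates = suggest_reclassifications(dependency_map, sacred={"checkout", "payments"})
```

In this example `candidates` contains `search_ranking` and `category_layout`, while `homepage_banner` stays replaceable because nothing in the core reaches it.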

Building quality in a high-change environment

- Write near-immutable tests for invariants.

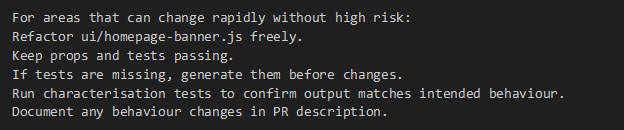

- Run characterisation tests before refactors.

- Use property-based testing for business rules.

- Apply mutation testing – changing code in small, controlled ways to check that your tests really fail when they should. This is especially valuable for high-risk areas or AI-written code.

- Use contract testing for boundaries between services.

- Add observability checks to your CI pipeline.
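
Two of these techniques are easy to show together. The sketch below is a hand-rolled property test for a discount rule, followed by a hand-made "mutant" of that rule to confirm the test fails when it should. It uses only the standard library and a fixed random seed so every run is repeatable; in practice a library such as Hypothesis and a mutation testing tool would do this work. The `apply_discount` rule itself is an assumption for illustration.

```python
import random


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical business rule: clamp the percentage, then discount."""
    percent = max(0, min(percent, 100))
    return price_cents - (price_cents * percent) // 100


def mutant_apply_discount(price_cents: int, percent: int) -> int:
    """Hand-made mutant: the clamp on the percentage has been deleted."""
    return price_cents - (price_cents * percent) // 100


def discount_property_holds(fn, trials: int = 500, seed: int = 1234) -> bool:
    """Property: 0 <= discounted price <= listed price, for every input tried."""
    rng = random.Random(seed)  # fixed seed keeps the run repeatable
    cases = [(0, 0), (1000, 100), (1000, 150), (1000, -10)]  # known edge cases
    cases += [
        (rng.randint(0, 100_000), rng.randint(-50, 200)) for _ in range(trials)
    ]
    return all(0 <= fn(price, percent) <= price for price, percent in cases)


original_passes = discount_property_holds(apply_discount)
mutant_is_caught = not discount_property_holds(mutant_apply_discount)
```

Both flags come out `True`: the real rule satisfies the property, and the mutant is caught by the 150% edge case. If the mutant had survived, that would be a sign the test suite is not actually protecting the invariant.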

Integrating testing into the vibe-coding cycle

- Before changes: Define invariants and must-pass checks.

- During changes: Developers and testers work in parallel. If AI spots coverage gaps mid-change, it can suggest extra test runs – but this should be discussed, not done blindly.

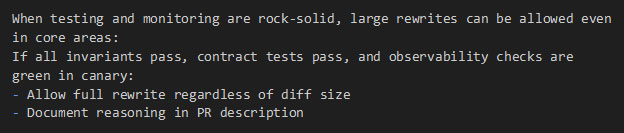

- After changes: Roll out to a small audience (canary) with metrics, then decide whether to proceed or roll back.

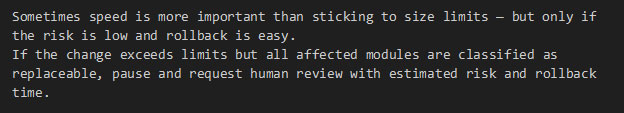

Speed matters, but timing is everything. Catching a defect minutes after a commit is far better than finding it in the morning. Rollback speed should be measured just as carefully, for example against the DORA metrics, which include how long a team takes to recover from a failed deployment.
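
The "proceed or roll back" step after a canary release can be reduced to a small, reviewable rule. This is a minimal sketch that compares canary and baseline error rates; the thresholds (`min_requests`, `max_rate_increase`) and type names are placeholder assumptions that each team would need to tune, and a real rollout would usually look at latency and business metrics as well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowMetrics:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(
    baseline: WindowMetrics,
    canary: WindowMetrics,
    min_requests: int = 500,          # assumed minimum sample before deciding
    max_rate_increase: float = 0.005,  # assumed tolerated rise in error rate
) -> str:
    """Return 'wait', 'rollback' or 'proceed' for the current canary window."""
    if canary.requests < min_requests:
        return "wait"  # not enough traffic to judge either way
    if canary.error_rate > baseline.error_rate + max_rate_increase:
        return "rollback"
    return "proceed"
```

Keeping the rule this explicit means the rollback trigger can be tested and timed like any other piece of code.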

Quality goes beyond green tests

- Build architectures that are naturally easy to test.

- Make invariant ownership explicit.

- Keep feedback loops short and visible.

A testing-led approach to safe vibe coding

If we want vibe coding without chaos, we need:

- Invariants clearly defined and protected.

- Test coverage concentrated on high-risk areas.

- Real-time visibility into the system’s behaviour.

- Disciplined changes – small diffs in the core, more freedom at the edges.

Speed is fine, but only if detection and rollback are faster still.

How to do this with AI prompts

The safest way to use AI in fast-moving projects is to tell it exactly how to work, in a way that’s enforceable. The rules should change depending on whether it’s working in core or replaceable areas.

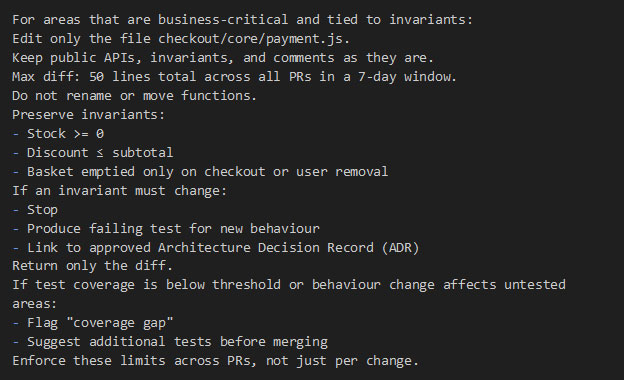

Core Modules

Replaceable Modules

Decision Overrides

Invariant-Driven Override

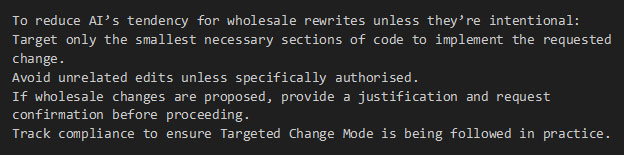

Targeted Change Mode

Prompt Guardrails

- Always list the invariants that must be preserved.

- Always limit the diff size for core modules unless an approved override applies.

- Always return a failing test before intentionally changing behaviour.

- Never touch files outside the requested scope.
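
Guardrails in a prompt are only requests until something checks them. The sketch below shows one way to verify an AI-proposed change against three of the rules above before it reaches review: invariants listed, files within scope, and a diff limit for core modules. The path prefixes, the 40-line limit, and all names are assumptions for illustration. The failing-test rule is left out because it needs test-run results rather than diff data.

```python
from dataclasses import dataclass

CORE_PREFIXES = ("checkout/", "payments/", "stock/")  # hypothetical core paths
MAX_CORE_DIFF_LINES = 40  # hypothetical limit for changes in core modules


@dataclass(frozen=True)
class FileChange:
    path: str
    lines_changed: int


def guardrail_violations(
    changes: list[FileChange],
    requested_scope: list[str],
    listed_invariants: list[str],
    override_approved: bool = False,
) -> list[str]:
    """Return one message per broken guardrail (empty list = change may proceed)."""
    violations = []
    if not listed_invariants:
        violations.append("no invariants were listed")
    scope = tuple(requested_scope)
    for change in changes:
        if not change.path.startswith(scope):
            violations.append(f"{change.path} is outside the requested scope")
    core_lines = sum(
        c.lines_changed for c in changes if c.path.startswith(CORE_PREFIXES)
    )
    if core_lines > MAX_CORE_DIFF_LINES and not override_approved:
        violations.append(
            f"core diff is {core_lines} lines, limit is {MAX_CORE_DIFF_LINES}"
        )
    return violations
```

A check like this could run as a pre-merge step, with the override flag set only after a human has signed off.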

Tool Spotlight: VeloAI — Your Testing Co-Pilot

VeloAI is an AI-driven assistant built for testers, and it lines up neatly with the approach we’ve outlined here. It’s not trying to replace people. It’s there to handle the repetitive and the procedural so humans can focus on collaboration and judgement calls.

What it can do:

- Check requirements for gaps or ambiguity before anyone starts coding.

- Generate detailed test scenarios and cases quickly.

- Turn those cases into working automation scripts.

- Highlight missing coverage, especially around high-risk or AI-touched code.

- Suggest unit tests to strengthen low-level safety nets.

- Work with your own domain context so outputs are relevant.

Why it’s a fit here:

- Keeps invariants safe by catching drift early.

- Speeds up test creation so feedback comes sooner.

- Directs attention to the highest-risk gaps.

- Works as an assistant under human review, not as an unchecked actor.

| Our Principle | VeloAI’s Contribution |
|---|---|
| Define and enforce invariants | Requirement checks before coding |
| High-quality, targeted coverage | Gap analysis and prioritisation |
| Fast, clear feedback loops | Rapid generation of test scripts |
| Collaboration over gatekeeping | Supports testers without replacing them |
| Guided AI changes over wholesale rewrites | Produces targeted, context-aware test code |

Closing thoughts

Everything changed, but everything stayed the same. We just have a new junior team member who is eager to get going. Like before, we will have to show them the ropes.

In the end, vibe coding/AI isn’t the villain — it’s the fire.

Left unchecked, it burns through time, trust, and revenue. Controlled, it cooks the meal faster.

The difference lies in how we prepare:

- invariants as the recipe,

- observability as the thermometer,

- and skilled people (with AI on a short leash) as the chefs.

Get that right, and speed stops being a gamble and starts being an advantage.